Mitigating hate speech online using AI

Oct 8, 2024

Summary:

In tackling the challenge of mitigating hate speech online, I explored how AI can provide a more effective approach than traditional content removal. Although removal efforts are essential, they often fail to address underlying issues and may lead to further divisiveness. To bridge this gap, I researched how AI could be used to offer nuanced, empathetic counter-responses that encourage constructive change in behavior while protecting users from the emotional toll of addressing hate speech.

Through this exploration, I identified a few actionable strategies for social media platforms: enable features like message sentiment analysis while typing, provide context around flagged content, and offer safe, guided responses to encourage positive engagement. Incorporating these features would help align platform policies with user well-being, making combating hate speech less emotionally taxing and empowering more users to participate in counter-speech efforts safely and effectively.

Employing AI to reduce the emotional toll of combating online hate speech.

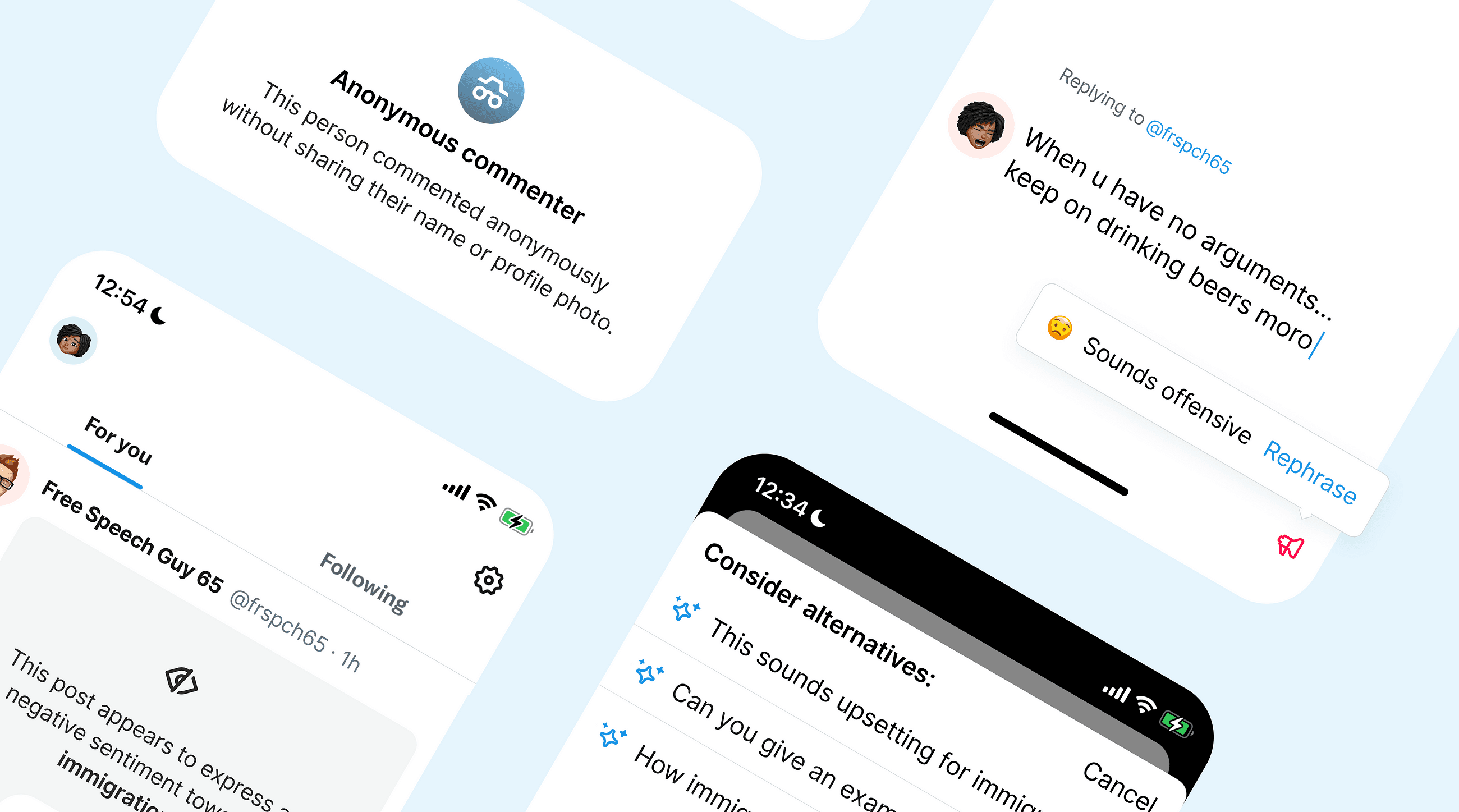

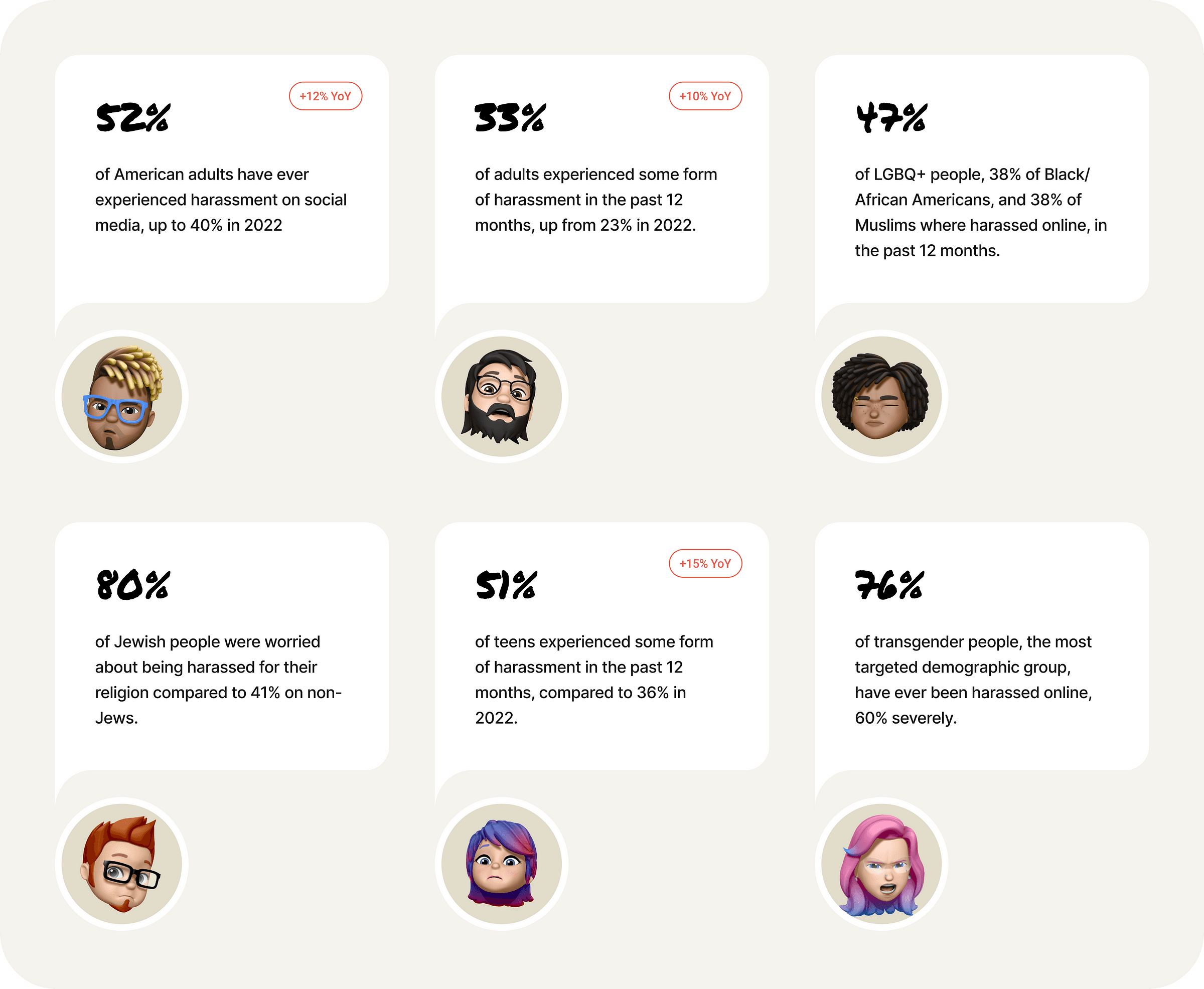

Key Findings from Online Hate and Harassment: The American Experience 2023 report.

Online hate speech has real-world consequences, including hate crimes and physical attacks. Research shows that exposure to online hate speech weakens empathy [1], which may lead to more criminal behaviour [2].

Data reported by the US [3] [4] [5] [6], the UK [7], and European countries [8] indicate that in recent years, online hate speech has exploded, and hate-related crimes are at a record high.

Most of the hate speech still occurs on mainstream social media platforms.

![Top 3 platforms on which respondents experienced online abuse (percentages — multiple answers). [9], p.24](https://framerusercontent.com/images/K3hRVbK9PRvWEaOQwhhmUR5sl9U.png)

Despite tech companies’ commitments to making their platforms safe, hate speech continues to find its way onto major platforms [9] [10] [11].

During the COVID-19 pandemic, there was a spike in hate speech targeting women, with the majority of the abuse occurring on mainstream platforms such as Twitter (now X), Facebook, Instagram, WhatsApp, Slack, and Snapchat.

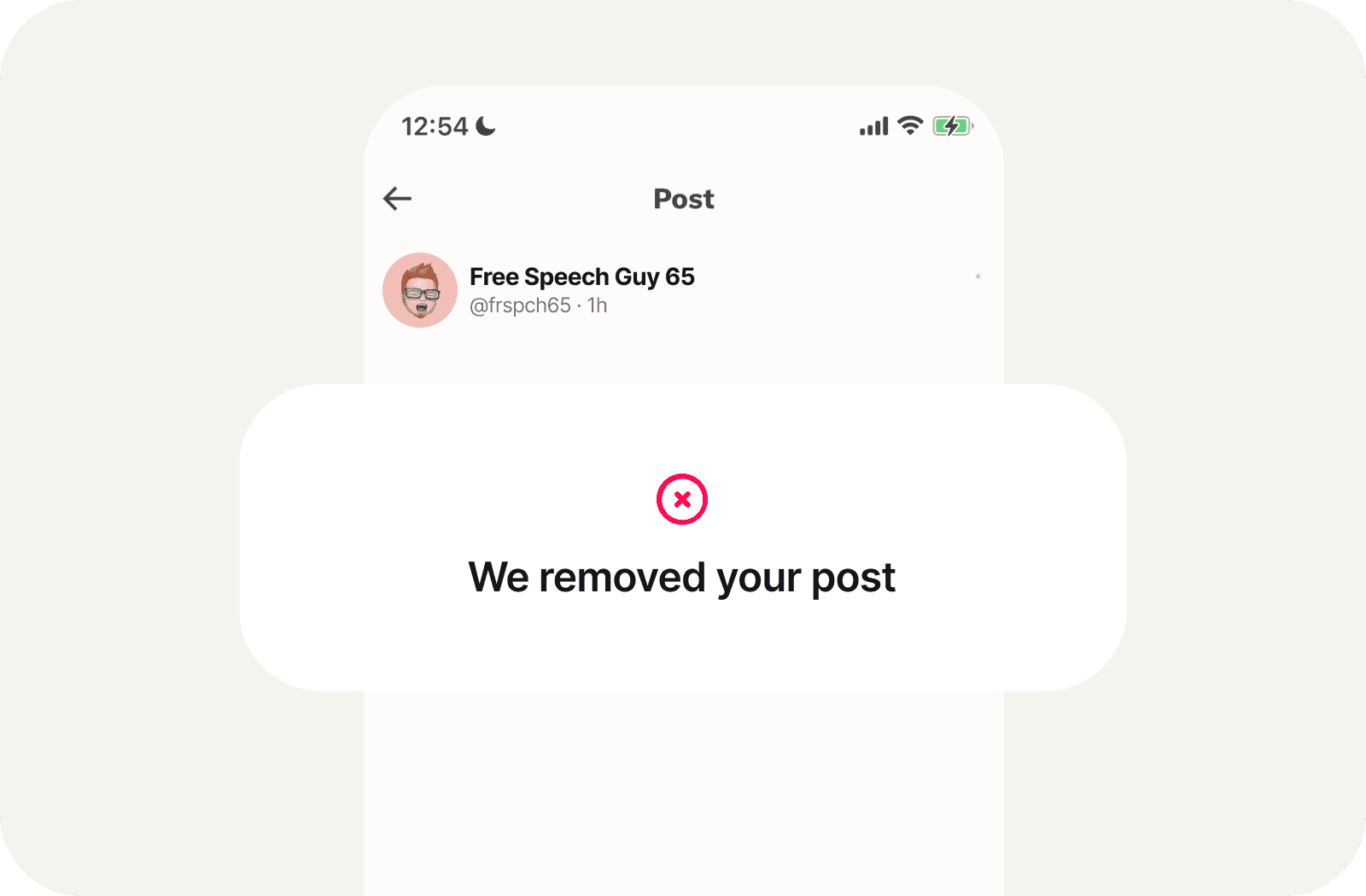

Social media platforms claim to take action, but there are caveats.

Social platforms report record numbers in the removal of hate speech [12], yet their commitments are not always consistent [13] [14] [15].

In addition, content removal serves to fracture and polarize the internet [16] [17]. As the UN Secretary-General puts it, ‘Social media provides a global megaphone for hate’ [18].

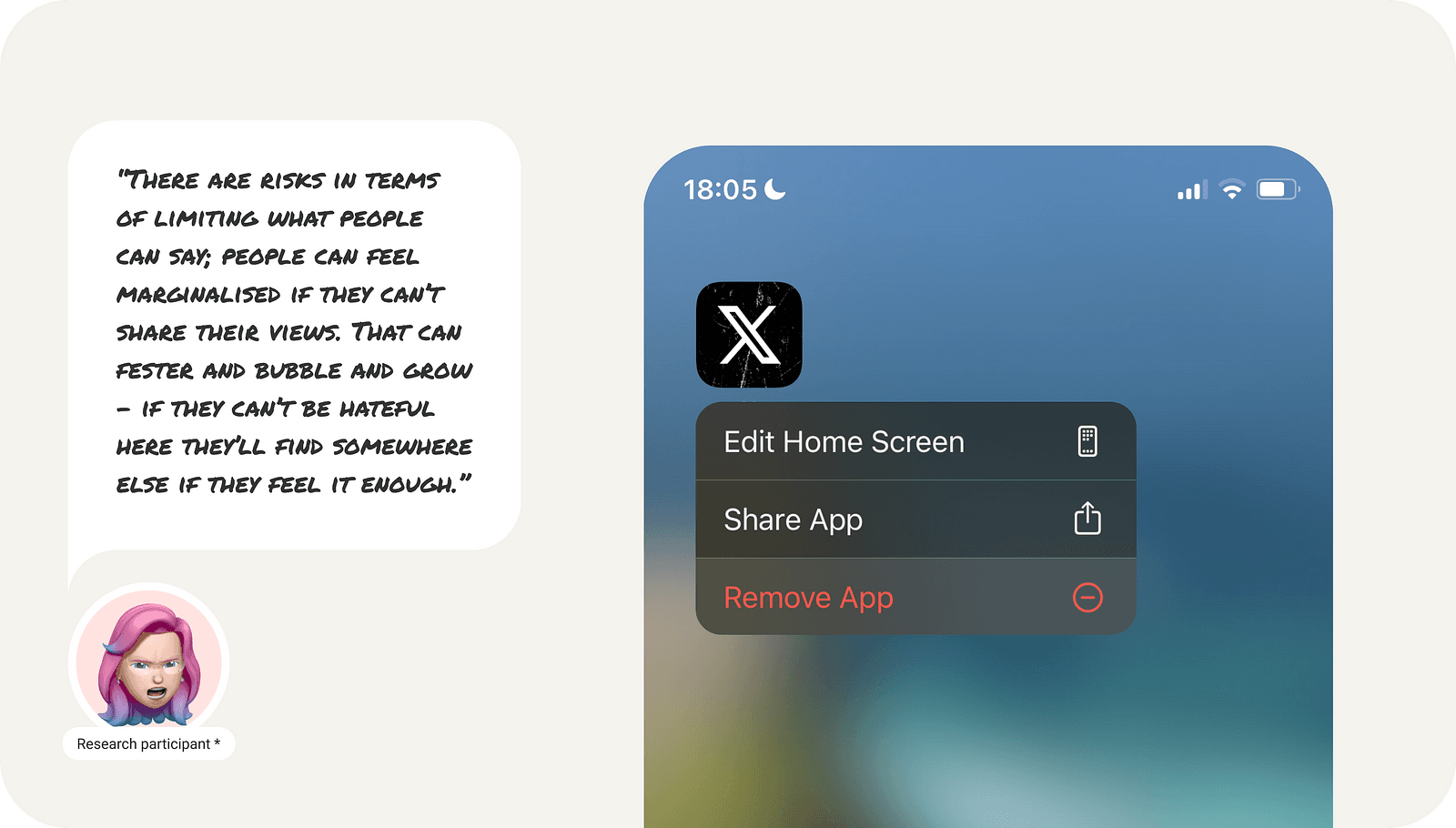

Taking down content hardly changes the minds of people who spread hate.

* [19] Online hate and freedom of speech. Qualitative research into the impact of online hate. Page 39

Straightforward moderation hardly changes the minds of those who speak hatefully [19]. Instead, they might ‘go underground’, sharing hate speech in other online places [20] [21].

The shooter who attacked a synagogue in Pittsburgh used Gab to share antisemitic hate speech and hinted at his plans before he killed eleven people and injured six more [22].

Research indicates empathetic responses can be used to alter offender actions.

Counter-speech messages that generate empathy for hate speech victims are more likely to convince senders to change their ways [23] [24] [25].

Hate speakers are 2.5x more likely to delete their hateful comments when confronted with empathetic counterspeech.

Researchers discovered that messages sparking empathy were 2.5 times more effective than humor or warnings of consequences in getting authors to remove offensive comments.

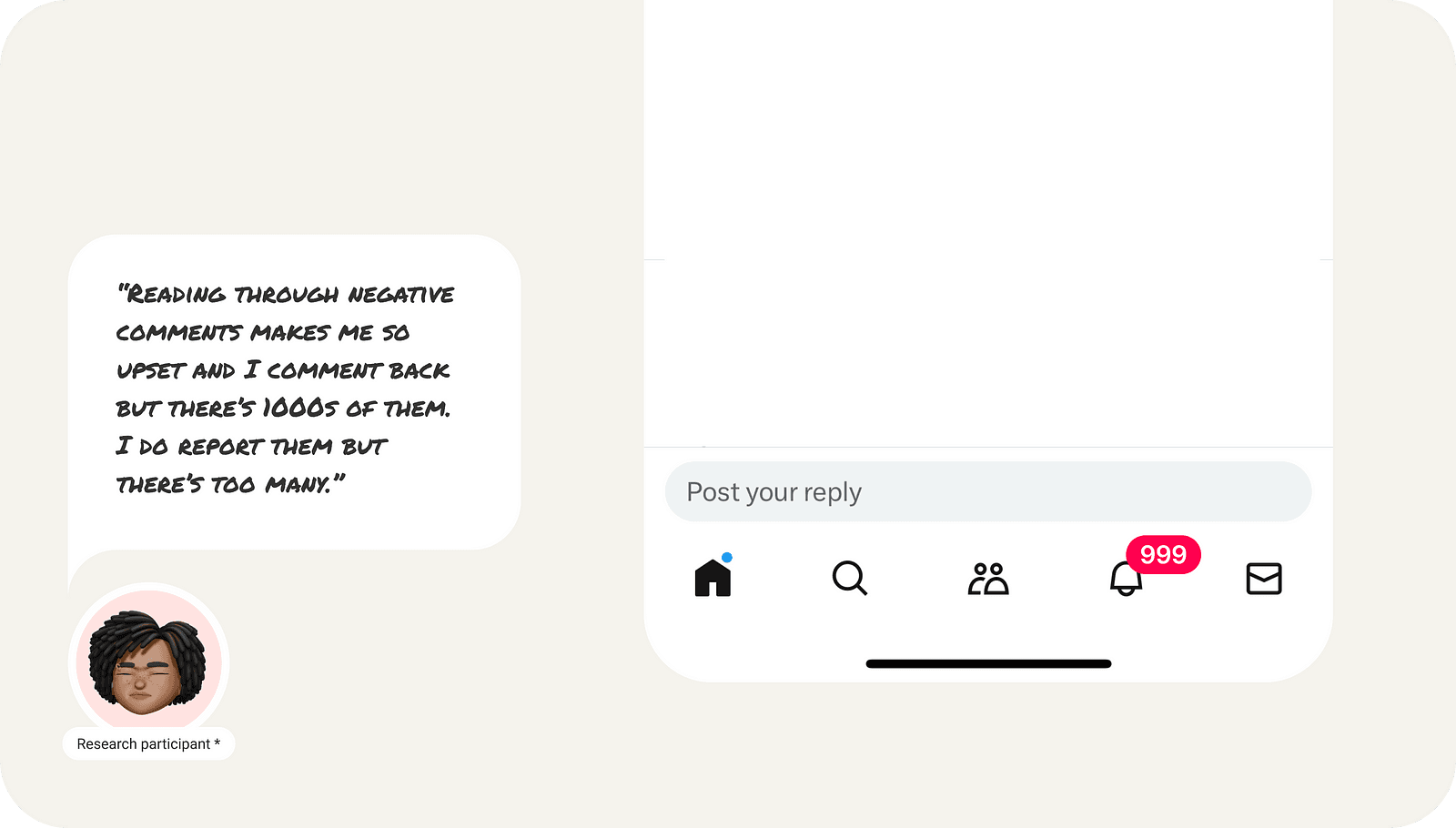

Although empathic counter-speech can be effective, it has its downsides.

The main reason people are hesitant to engage in counter-speech is that it can be emotionally taxing and potentially risky [24].

* [19] Reactions and coping behaviours. Qualitative research into the impact of online hate. Page 27

Counter-speakers often become targets of online attacks themselves, and being empathic towards offenders makes it even harder.

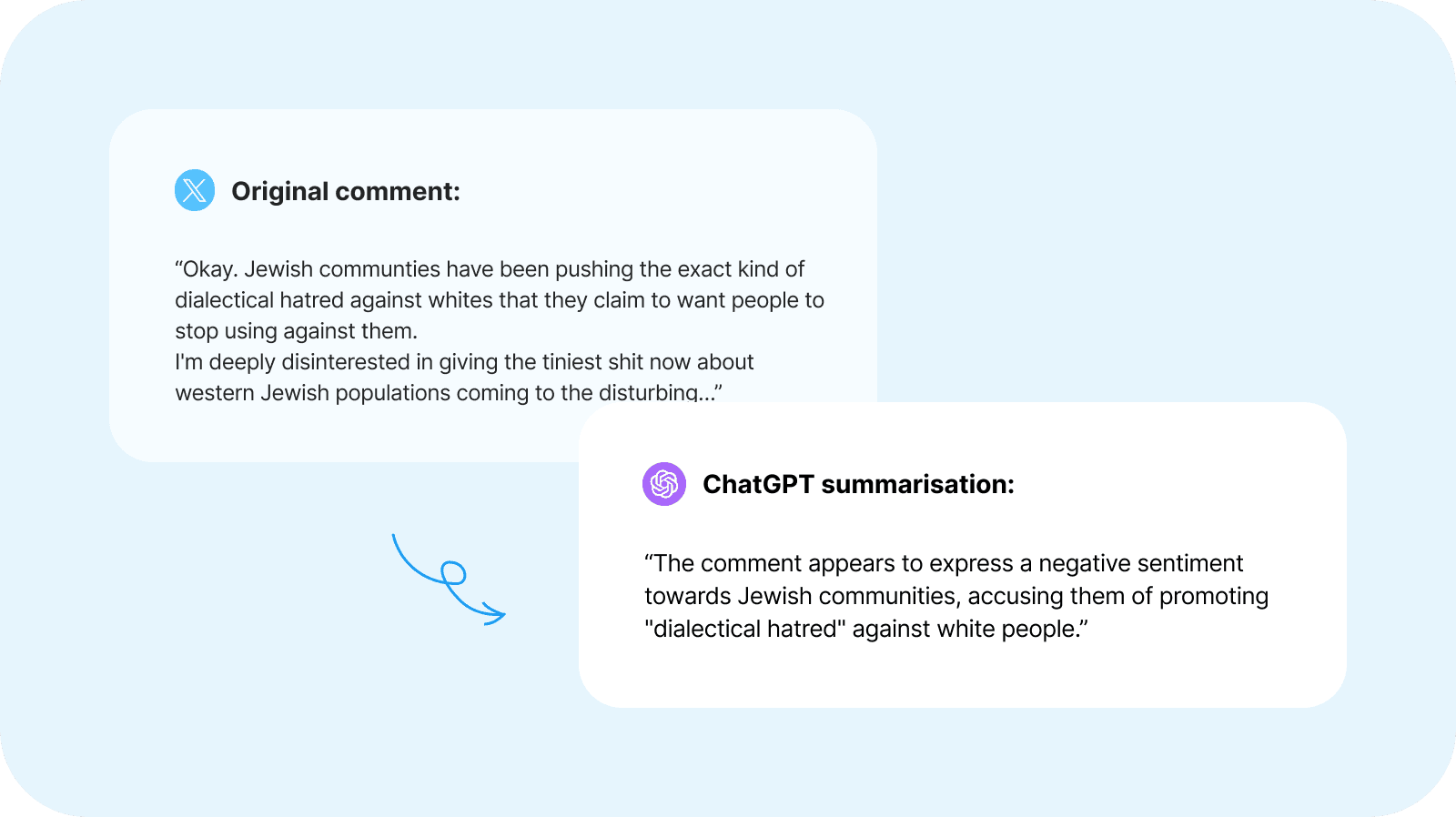

Current LLMs are capable of eliminating the downsides associated with empathic responses.

LLMs like ChatGPT can understand the language semantics of comments and provide context for hate speech.

ChatGPT was prompted to estimate the sentiment of a hate speech comment.

LLMs can also recognize the human biases involved and generate empathic replies for effective counter-speech.

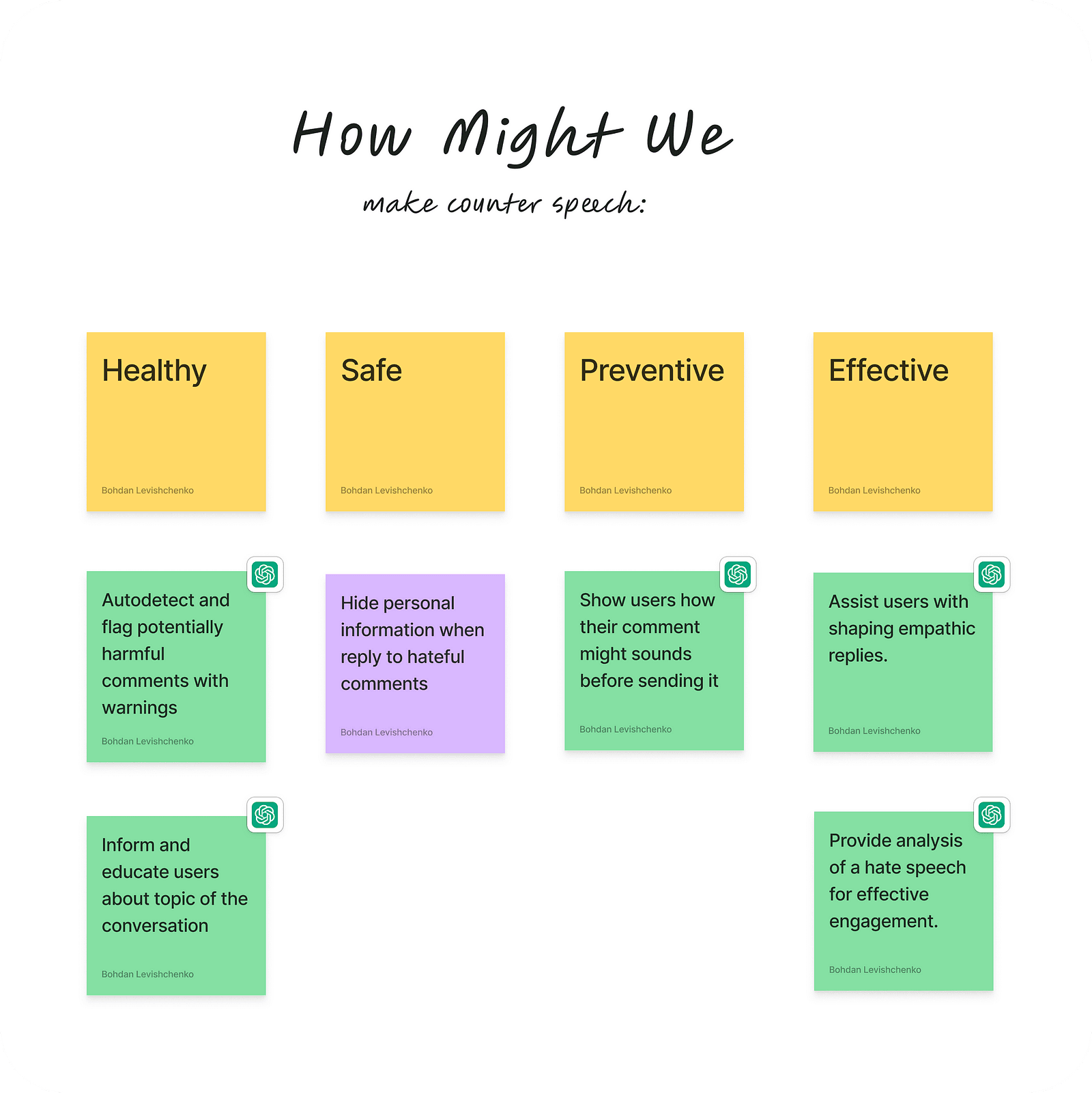

How can social media platforms use LLMs to mitigate hate speech?

There are a few aspects that social media platforms can address to help reduce the downsides associated with empathic responses.

Emotional health, physical safety, self-control, and guidance are the main themes in hate speech research.

Make combating hate speech less emotionally taxing.

There is a substantial number of people willing to combat hate speech online. Social platforms can support these individuals by flagging instances of hate speech.

Providing context for hate speech may play a crucial role in addressing it.

Large social media platforms already place sensitive content, such as nudity and violence, behind a content warning wall, but this does not extend to hate speech.

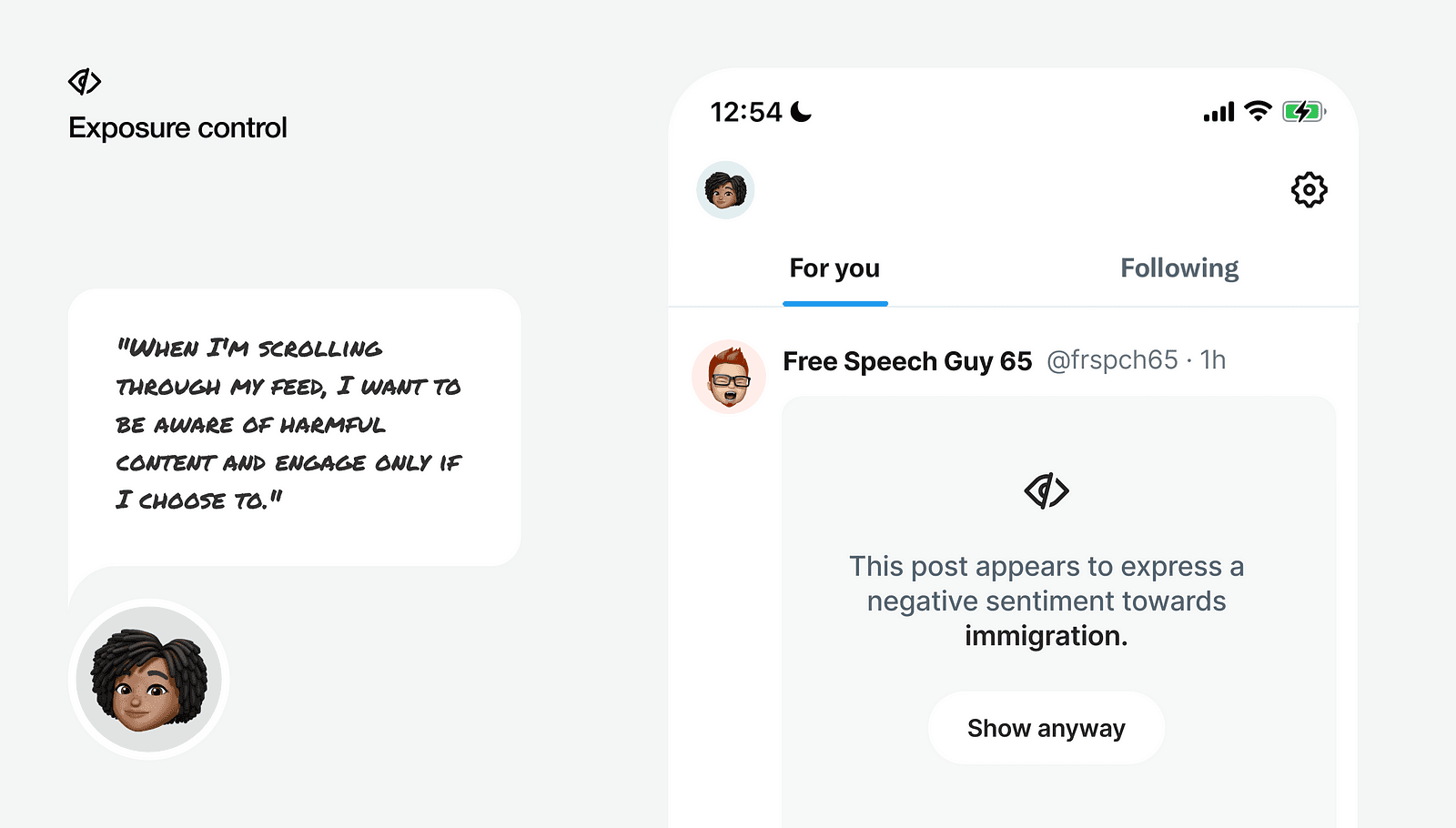

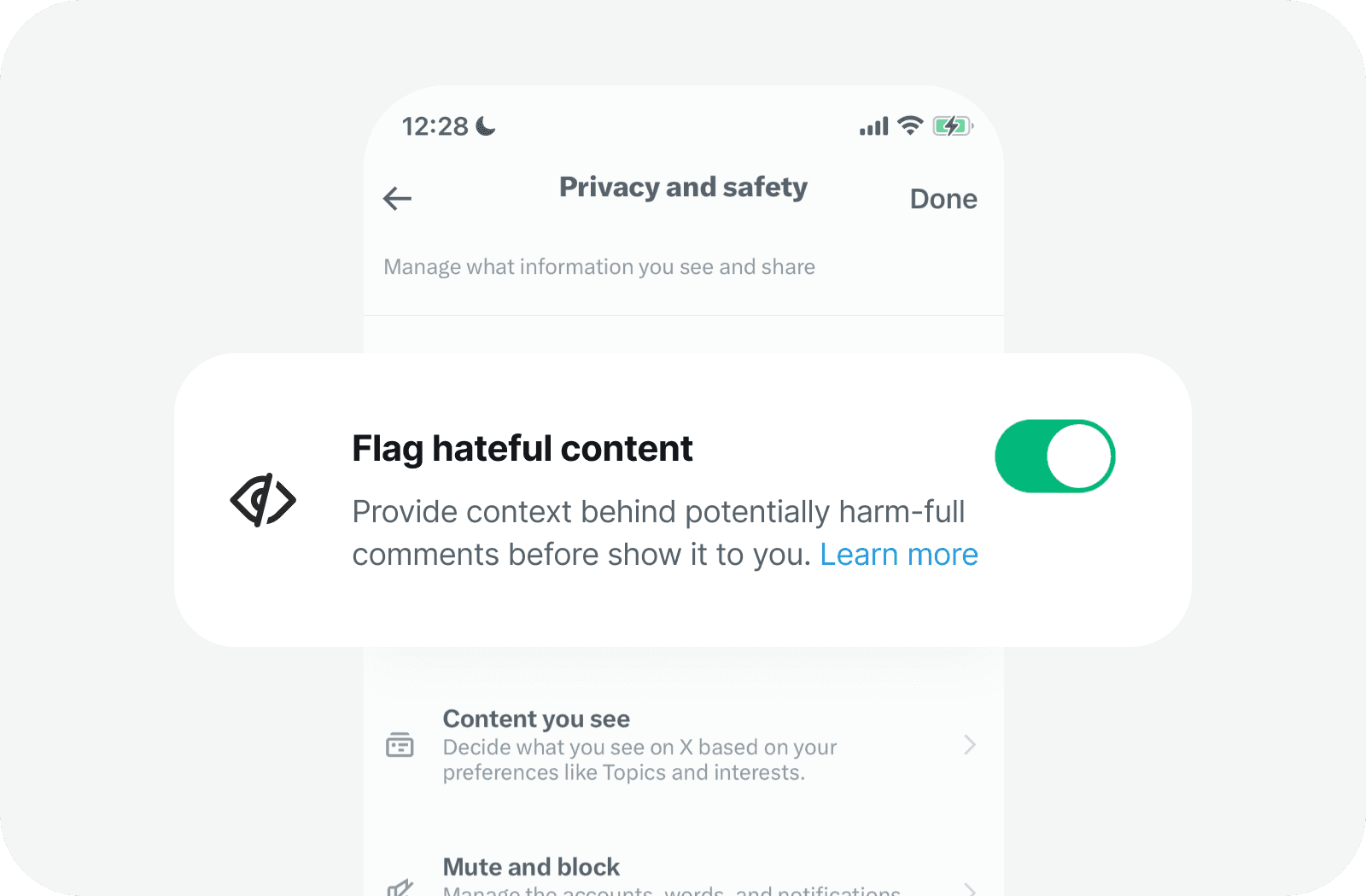

Hate speech content flagging settings.

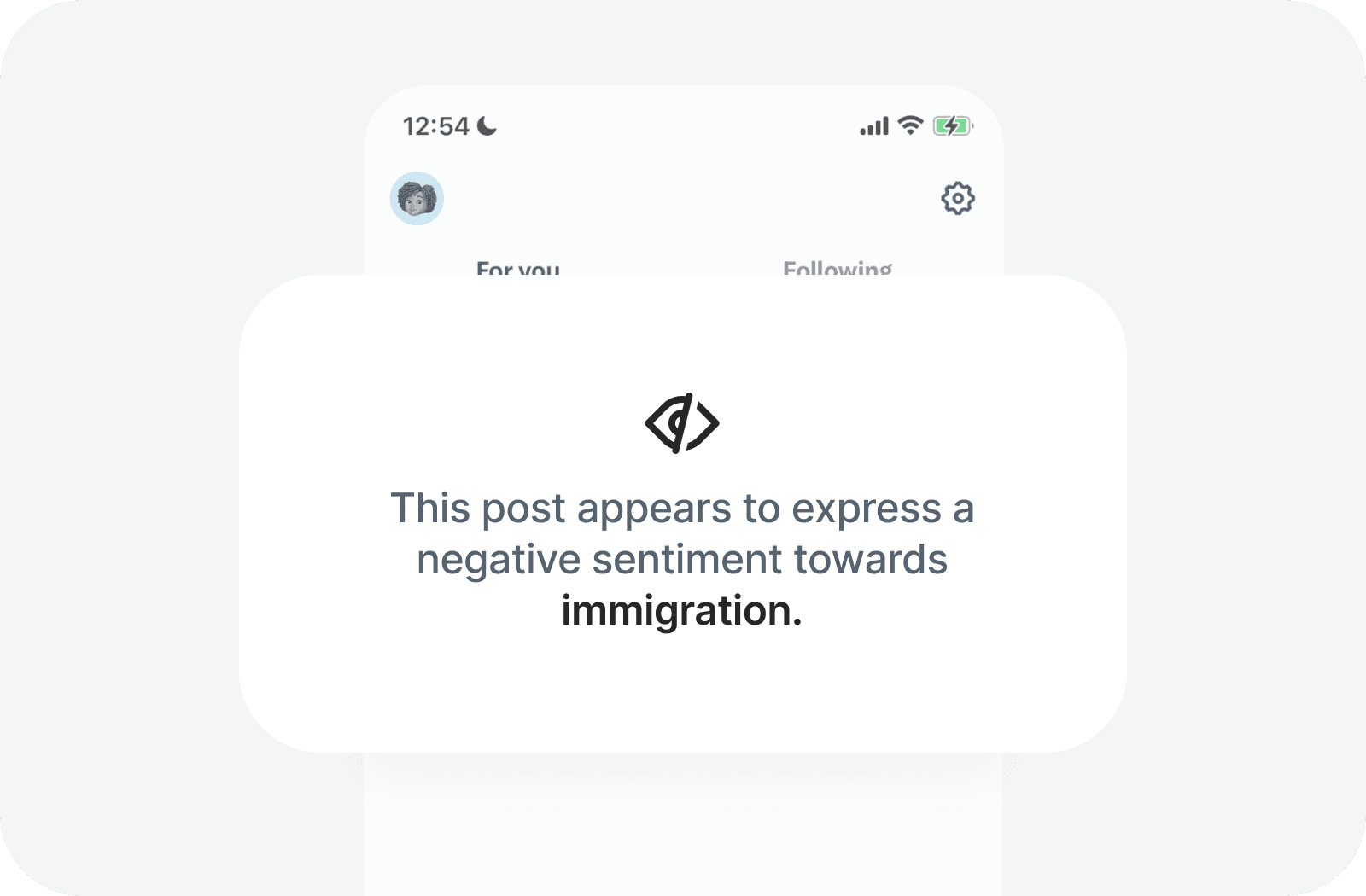

Providing context for harmful content can protect people from excessive exposure to hate speech and help them choose their battles more wisely.
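As a rough illustration of the flagging idea, here is a minimal sketch of how a feed might treat a flagged post depending on a user's preference. Everything in it is hypothetical: the `FlagMode` setting, the `Post` fields, and the assumption that an upstream classifier has already set `flagged` and written a short `context` note. It is not how any existing platform works.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FlagMode(Enum):
    """Hypothetical per-user setting for posts flagged as hate speech."""
    SHOW = "show"  # display the post as usual
    WARN = "warn"  # collapse the post behind a warning that gives context
    HIDE = "hide"  # leave the post out of the feed


@dataclass
class Post:
    author: str
    text: str
    flagged: bool = False  # assumed to be set by an upstream classifier
    context: str = ""      # short note on why the post was flagged


def render_post(post: Post, mode: FlagMode) -> Optional[str]:
    """Return the text to show in the feed, or None if the post is hidden."""
    if not post.flagged or mode is FlagMode.SHOW:
        return f"{post.author}: {post.text}"
    if mode is FlagMode.HIDE:
        return None
    # WARN: show the context instead of the content; the reader opts in to see it.
    return f"[Flagged as possible hate speech: {post.context}] Tap to view."
```

The point of the `WARN` branch is that the reader sees why a post was flagged before seeing the post itself, which is what lets them decide whether to engage.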

Flagged hate speech content in the feed.

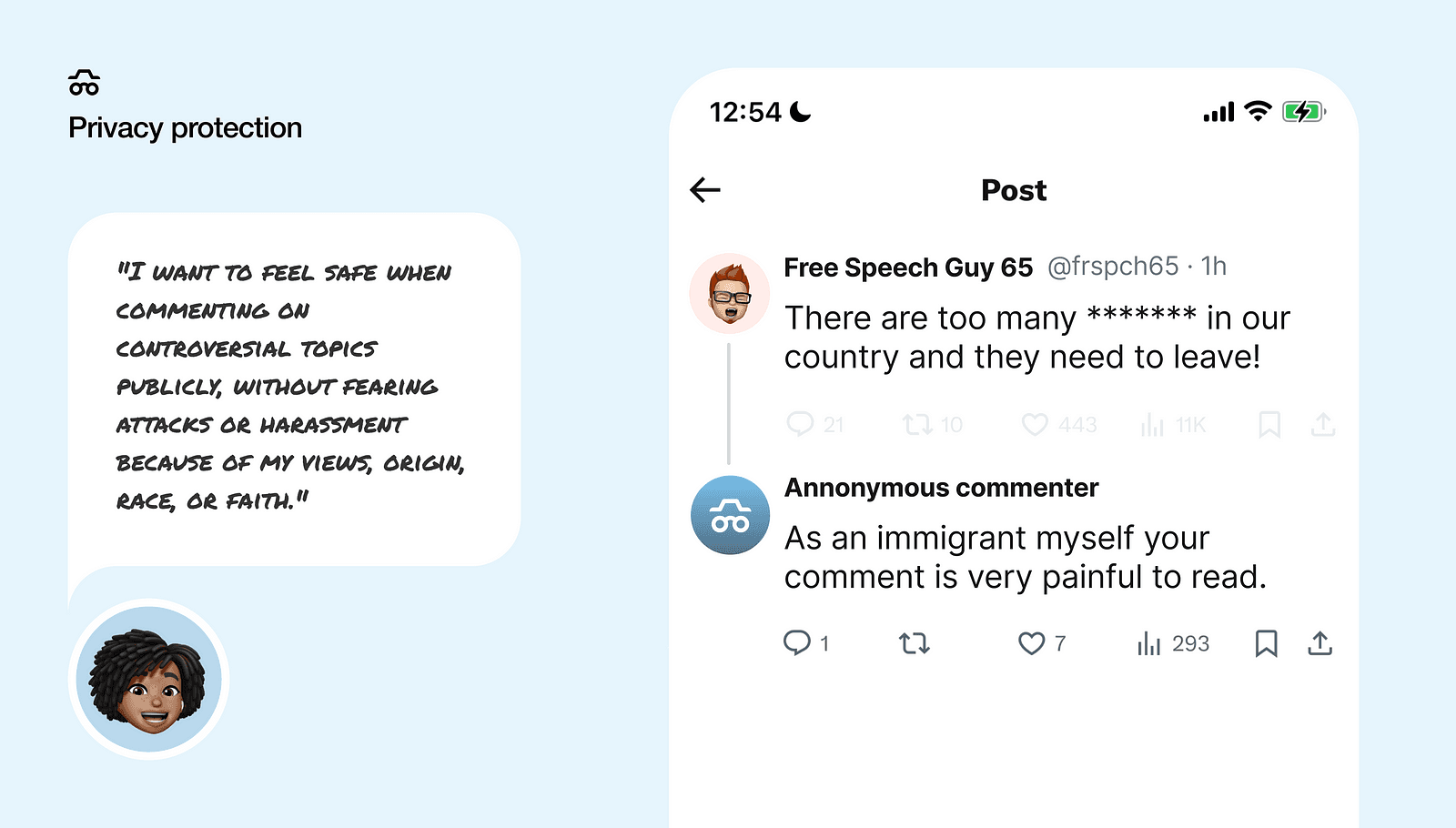

Protect people who engage and speak up.

Furthermore, people might be more inclined to join efforts in combating hate speech if they feel safe while commenting on it.

Safety and privacy features to protect against stalking.

Social media platforms like Facebook already implement features to protect personal information [26]. They could take a further step by extending these policies to combat hate speech.

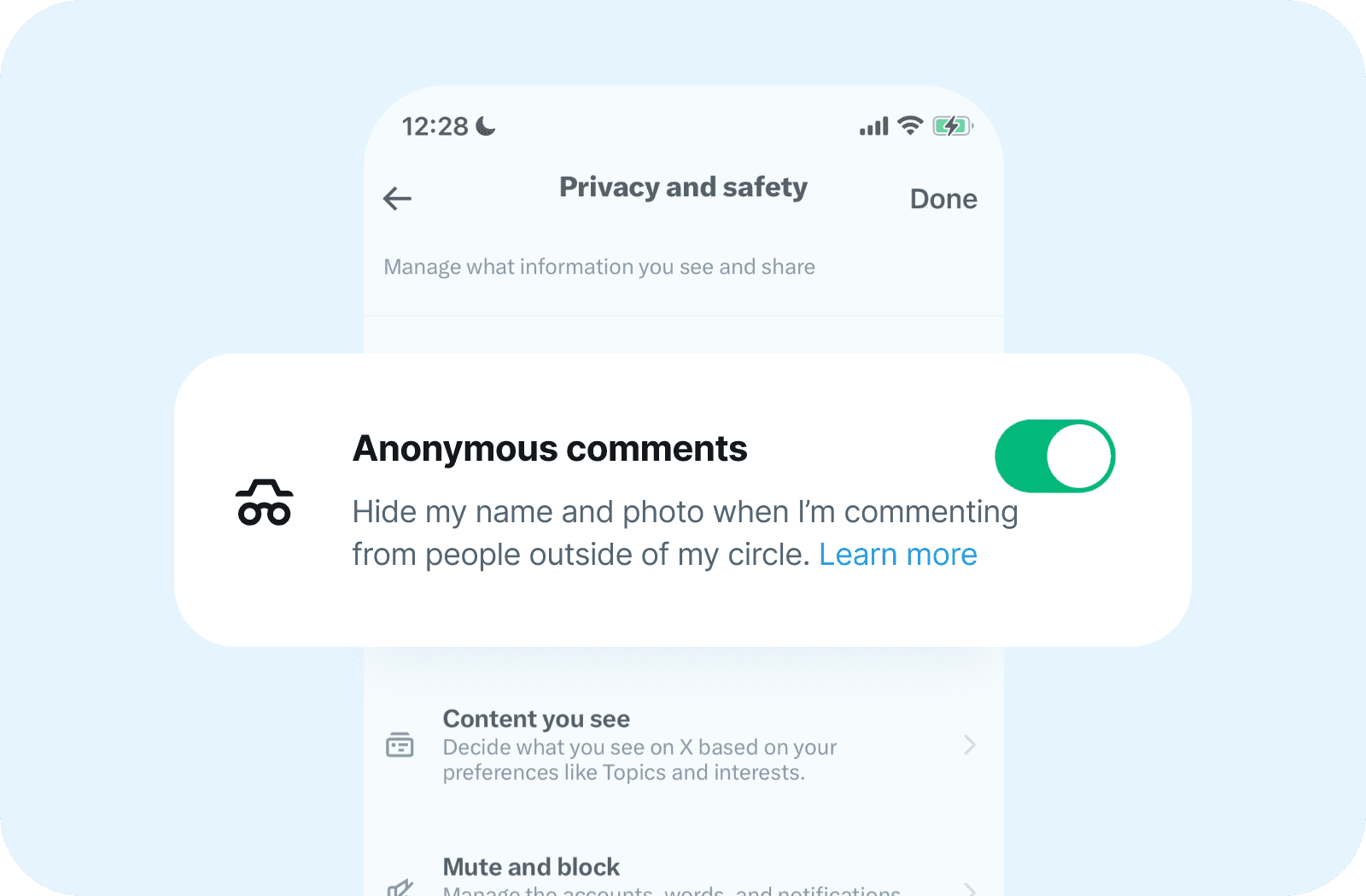

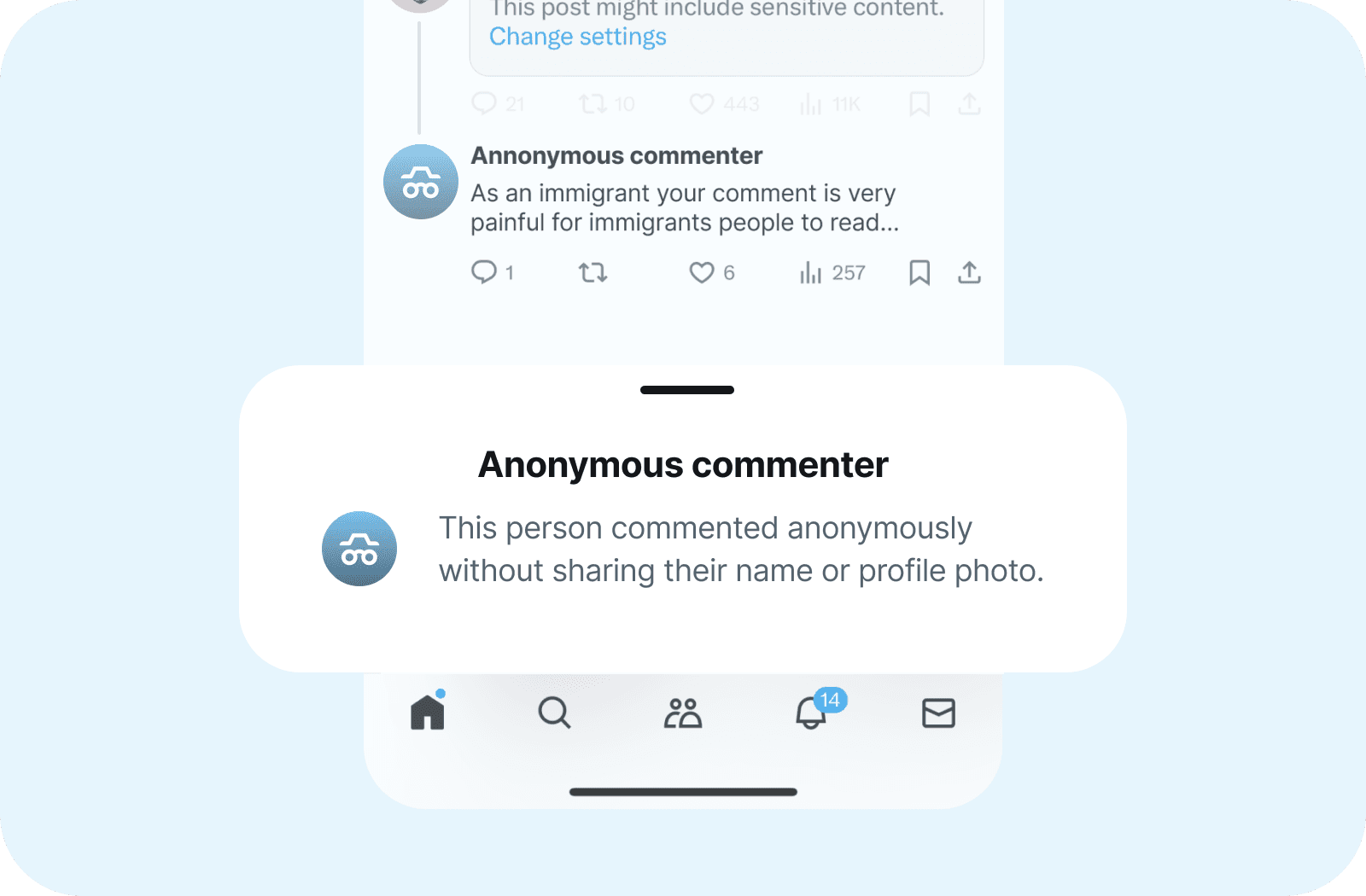

Anonymous comments settings.

Hiding personal information from people outside of your circle, especially when commenting on hot topics, might protect individuals from vulnerable social groups and empower them to speak out.

Anonymous comments view in the feed.

Provide guard rails for emotional swings.

People often use hate speech to relieve tension and frustration [27, p. 5]. The Gallup Global Emotions Report showed that one in three people worldwide felt daily pain in 2023 [28]. This suggests that any of us might slip into hate speech from time to time.

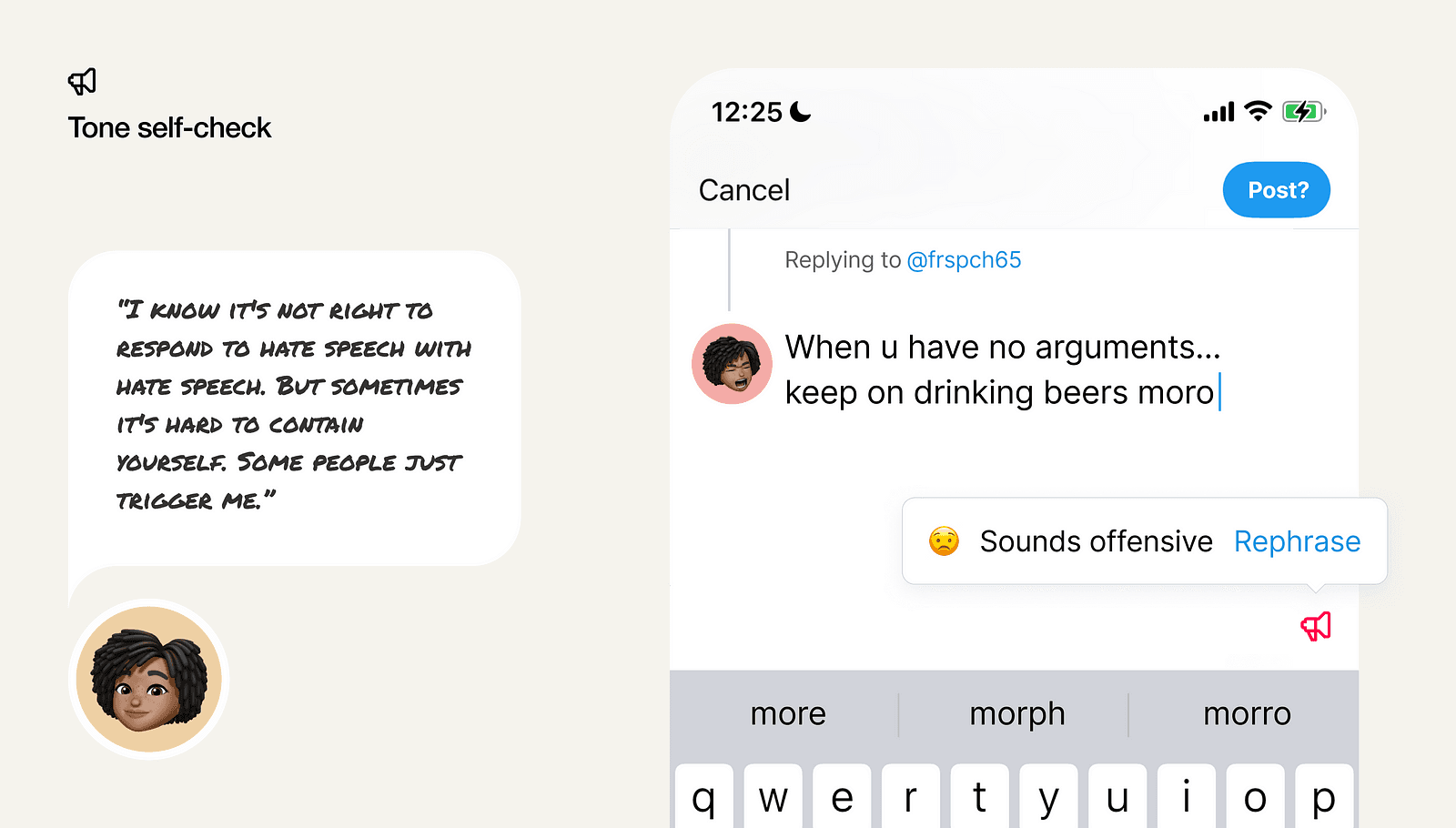

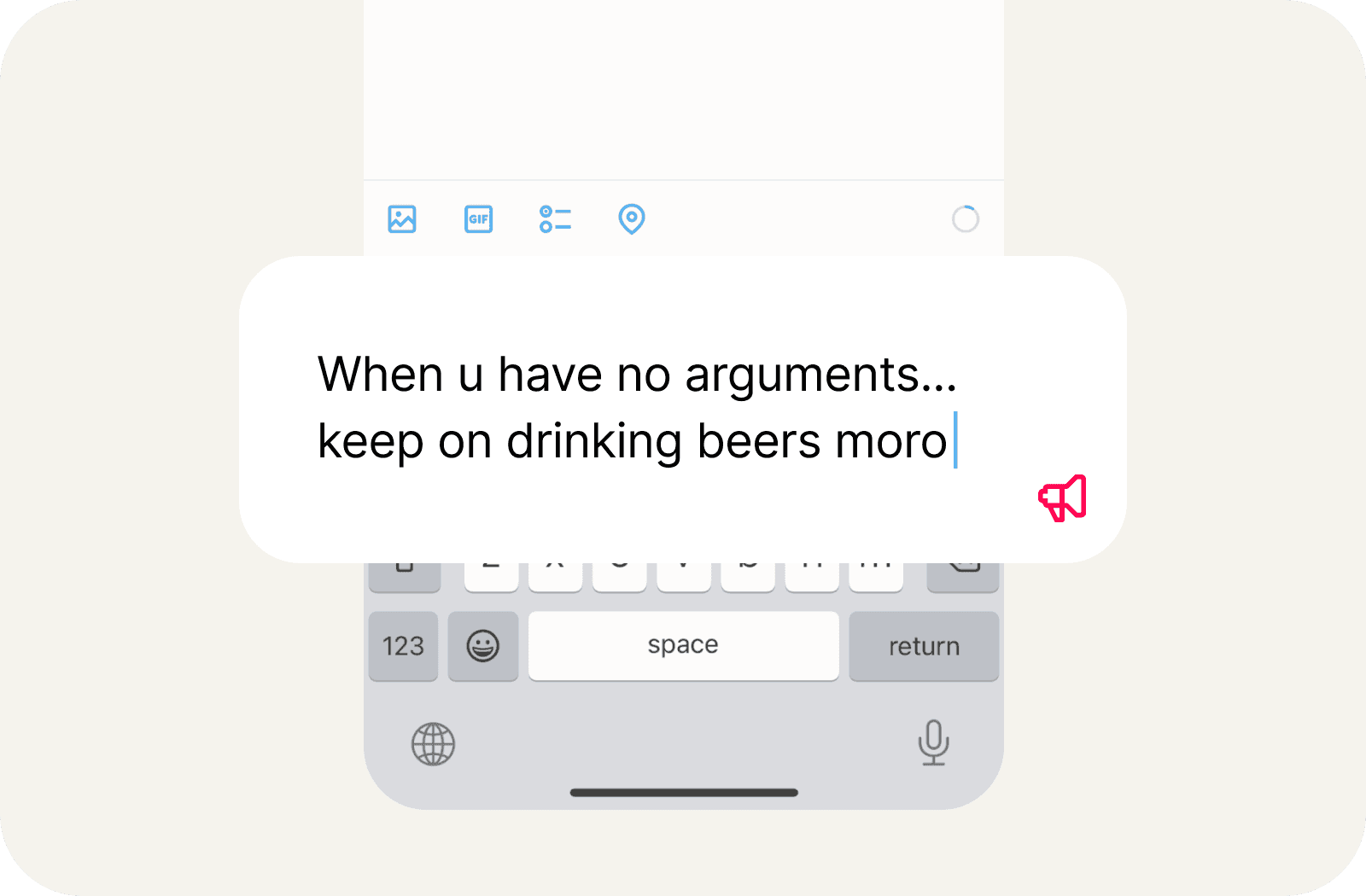

Emotional self-check feature.

Companies like Grammarly can analyze message sentiment in real-time [29], an approach that social media platforms could adopt to help prevent hate speech.

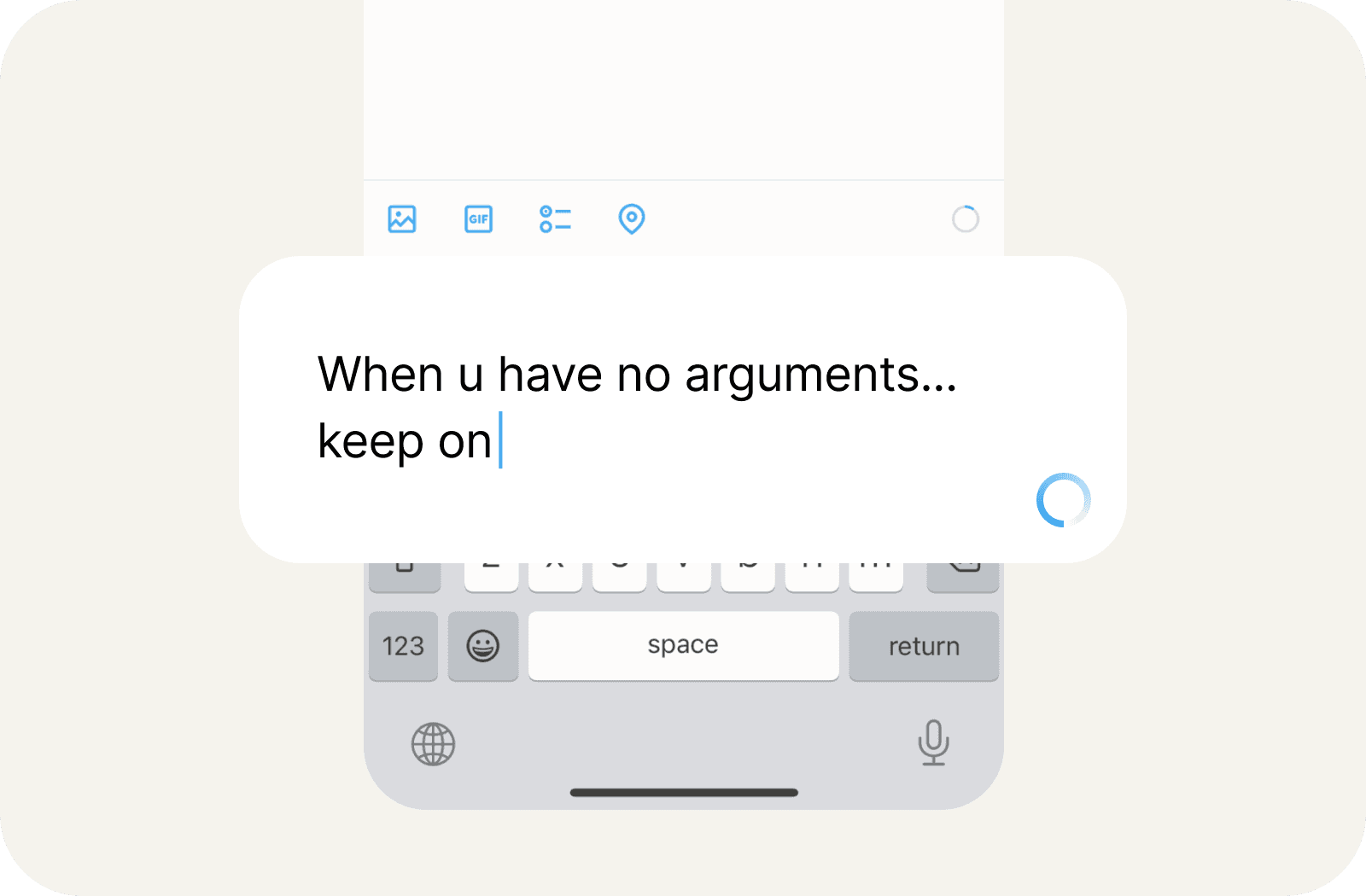

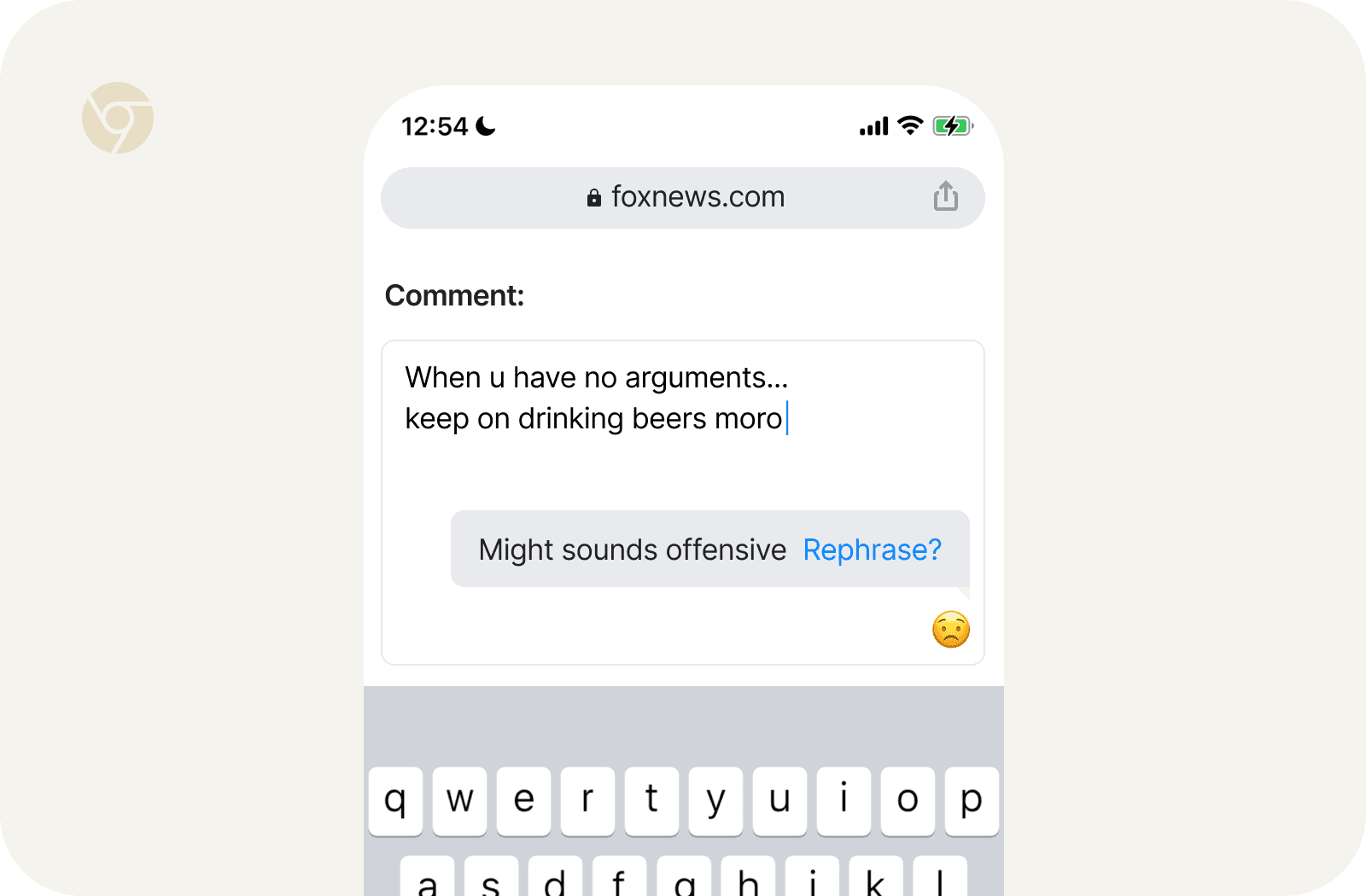

Message sentiment analysis while typing.

Automatically checking sentiment while typing a post or reply can help individuals in emotional states avoid posting hateful or inappropriate comments.
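The self-check could work roughly as sketched below: score the draft, and show a gentle nudge when the score crosses a threshold. This is only a sketch under loud assumptions. The `HOSTILE_WORDS` list and `toy_hostility_score` are toy stand-ins invented for this example, and a real feature would plug in a proper sentiment or toxicity model through the `score` parameter. The threshold of 0.2 is also arbitrary.

```python
from typing import Callable, Optional

# Toy stand-in for a real model: a tiny word list invented for this sketch.
HOSTILE_WORDS = {"hate", "stupid", "disgusting", "idiot"}


def toy_hostility_score(text: str) -> float:
    """Fraction of words in the draft that appear in HOSTILE_WORDS."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(w in HOSTILE_WORDS for w in words) / len(words)


def check_draft(
    text: str,
    score: Callable[[str], float] = toy_hostility_score,
    threshold: float = 0.2,  # arbitrary cut-off chosen for illustration
) -> Optional[str]:
    """Return a nudge for the author if the draft scores as hostile, else None."""
    if score(text) >= threshold:
        return "This message may come across as hostile. Review it before posting?"
    return None
```

Calling `check_draft` on each pause in typing would let the interface show or clear the nudge as the draft changes. The author stays in control: the check only prompts a second look and does not block posting.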

Indicating harmful speech detection.

Help people use counter-speech effectively.

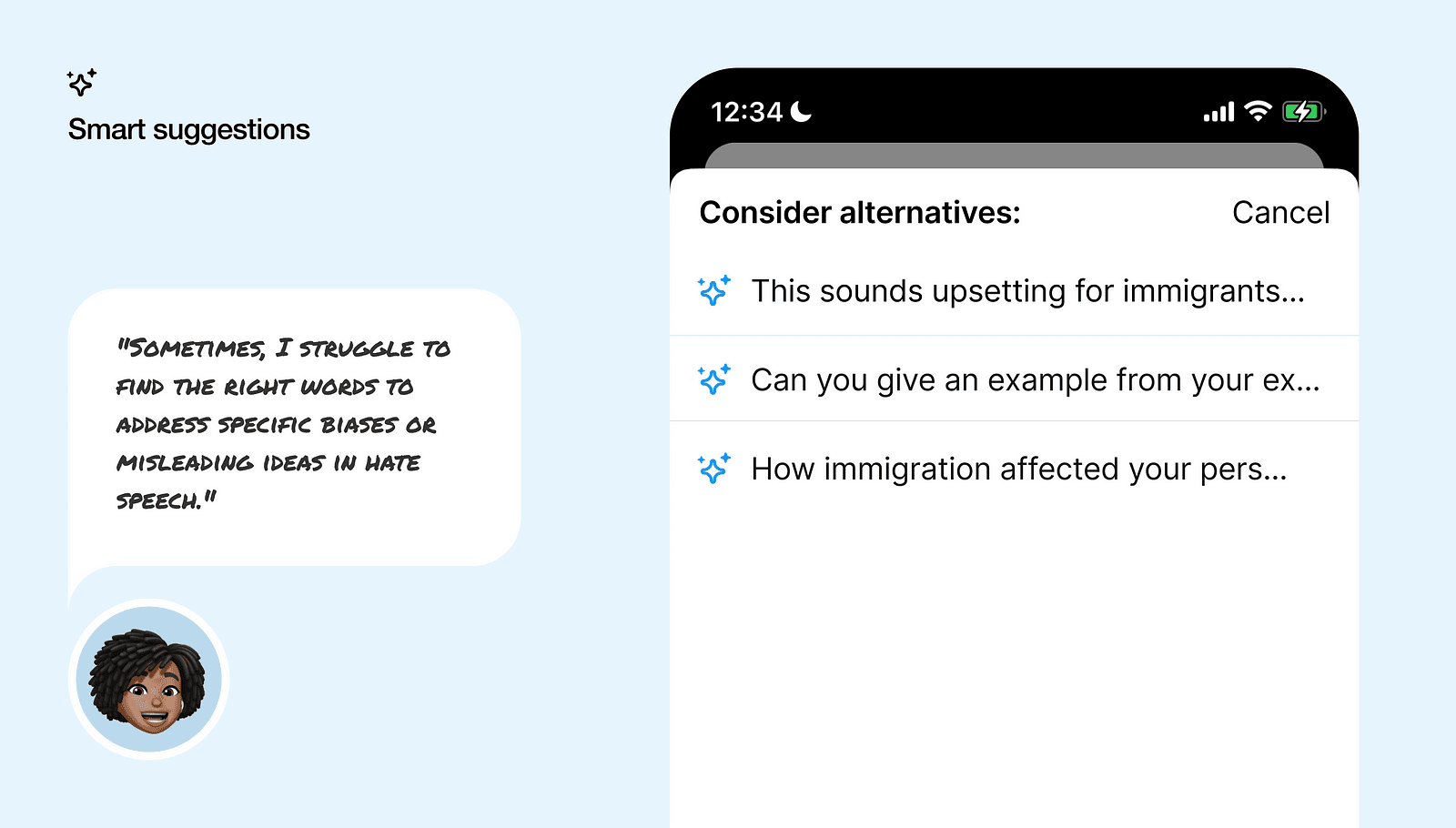

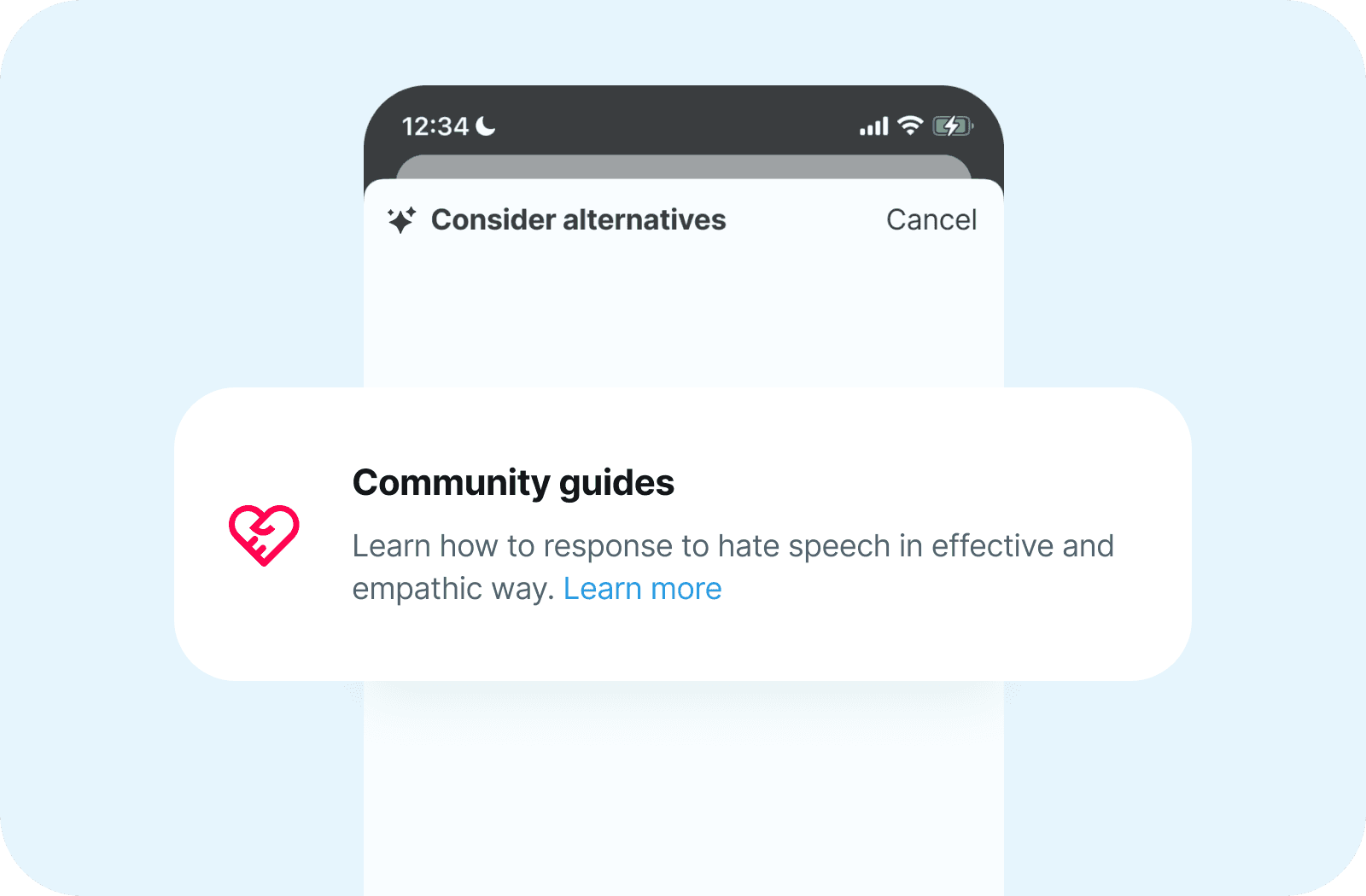

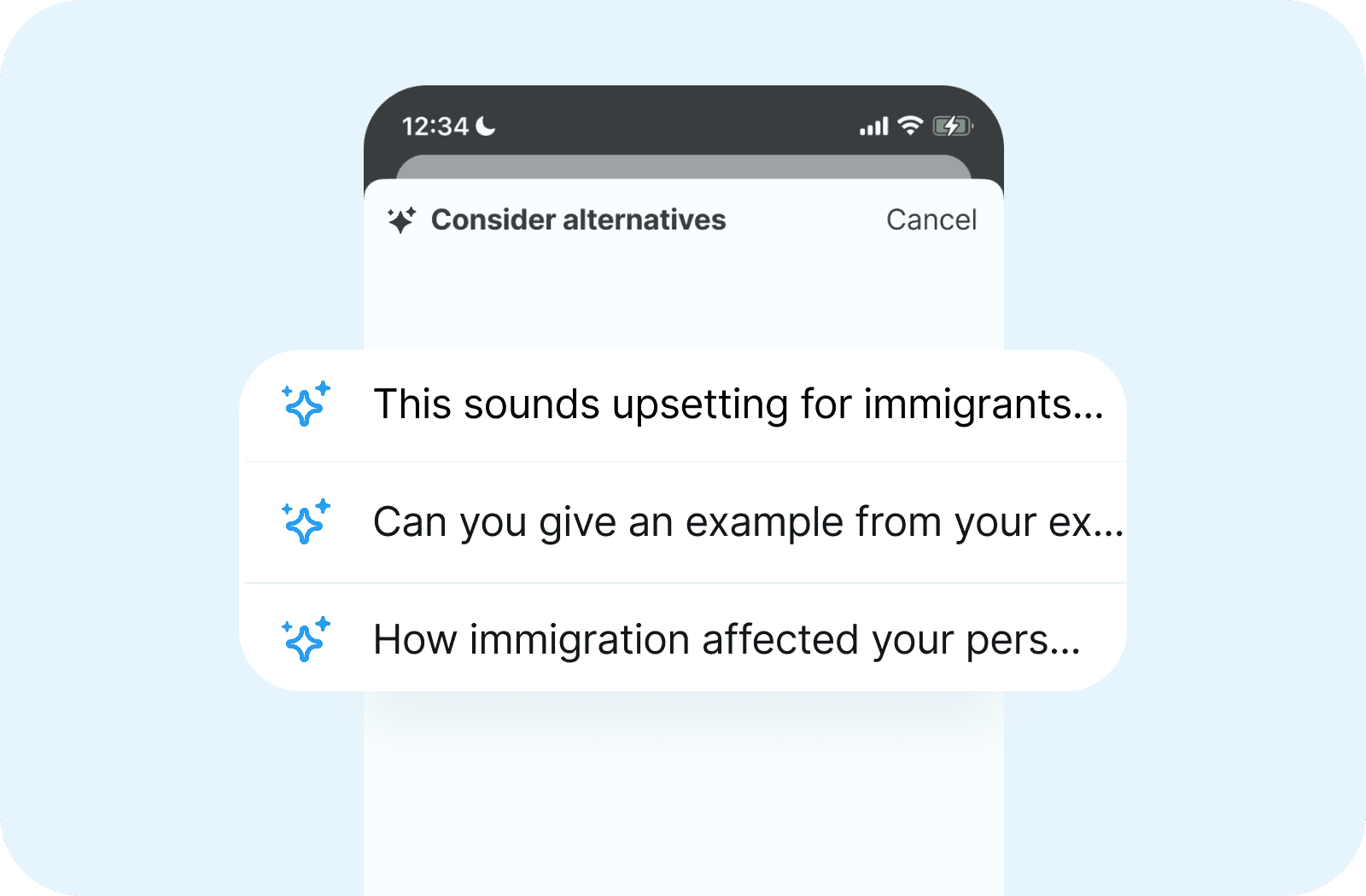

If there were tools to simplify and enhance responses to hateful posts or comments, more people might be encouraged to join the fight against hate speech.

Reply suggestions feature.

Social platforms offer resources and guidance to users encountering individuals with self-harm and suicidal thoughts [30], yet similar support is not extended for instances of hate speech [31].

Clear guidance on engaging with hate speech.

By offering comprehensive guides and suggestions generated by LLMs, social media platforms can help people know how to act when they face hate speech and engage effectively [32].
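A reply-suggestion feature could be wired up along these lines. The sketch assumes some LLM is available as a plain function from prompt text to response text (the `llm` parameter), so it does not depend on any particular vendor API. The prompt wording and the function names `build_prompt` and `suggest_replies` are illustrative assumptions; the only guidance encoded is the research finding cited earlier, that empathy for the people targeted works better than humor or warnings of consequences.

```python
from typing import Callable, List


def build_prompt(hateful_comment: str, n: int = 3) -> str:
    """Assemble an instruction asking for short, empathy-based replies."""
    return (
        f"Suggest {n} short replies to the comment below.\n"
        "Each reply should build empathy for the people the comment targets.\n"
        "Avoid humor, insults, and warnings of consequences.\n"
        "Write one reply per line.\n\n"
        f"Comment: {hateful_comment}"
    )


def suggest_replies(
    hateful_comment: str,
    llm: Callable[[str], str],  # any function mapping a prompt to model output
    n: int = 3,
) -> List[str]:
    """Ask the model for replies and return at most n cleaned-up suggestions."""
    raw = llm(build_prompt(hateful_comment, n))
    # Drop list bullets and blank lines that models often add.
    lines = [line.lstrip("-• ").strip() for line in raw.splitlines()]
    return [line for line in lines if line][:n]
```

Keeping the model behind a simple callable means the suggestions stay drafts: the person replying picks one, edits it, or ignores them all.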

Smart response suggestions view.

Counter-speech beyond social platforms.

The use of counter-speech could be extended to modern browsers and operating systems (OS).

Browser integrations for commenting online on websites.

Browser settings or browser extension

Browsers such as Safari, Chrome, and Brave could incorporate built-in features or extensions enabling users to self-check their communication on platforms beyond mainstream social media, including comments on tabloids and news websites.

iOS and Android integrations for commenting through messaging apps.

Mobile and desktop platforms

iOS, along with its sensitive content feature, could introduce a function to flag hate speech in iMessage or while browsing the web in Safari. Users could control this feature directly from their device settings or through social platform preferences, utilizing portable LLMs for support [33].

Counter-speech app.

To demonstrate the concept, I’ve constructed a custom ChatGPT. This version can assist users in self-checking their messages or crafting responses with empathy to hateful comments.

Custom ChatGPT as a proof of concept.

It uses UN guidelines for how to debate hate speech and knows human cognitive biases that are often present in hate speech.

Case 1: Response to a hateful comment.

You just need to provide the text you’re about to send or the hateful comment you would like to push back on.

Case 2: Pre-checking personal messages before they are posted.

Try DisagreeGPT →

Exploring the role of AI in mitigating hate speech on social media has revealed both significant challenges and notable opportunities. While the straightforward approach of content removal is crucial, it often doesn’t address deeper issues and can even lead to further divisiveness. AI offers more nuanced possibilities, such as improving detection and enabling empathetic counter-responses, which could help manage hate speech more effectively.

As we move forward, it would be beneficial to explore how AI algorithms can better capture the nuances of language and cultural contexts, which is key for distinguishing harmful speech from benign. There’s also room to think about how we can establish ethical guidelines for using AI in these scenarios, balancing safety with respect for free expression. Encouraging cooperation among tech companies, researchers, and policymakers could lead to a more unified and transparent strategy. This collaborative approach might help in crafting technologies that not only tackle hate speech but also support a digital environment that’s inclusive and respectful.

References:

Links to materials that were used to prepare this article.

Effects of uncivil online comments on aggressive cognitions, emotions, and behavior

[4] — Percentage of teenagers in the United States who have encountered hate speech

[5] — Report: Hate crimes surged in most U.S. big cities in 2022

[7] — UK government Official Statistics: Hate crime, England and Wales, 2021 to 2022

[8] — Anti-refugee rhetoric in Germany on Facebook is correlated with physical attacks

[9] — The ripple effect. Covid-19 and the epidemic of online abuse

[10] — Social media blamed for rise in racial and transgender hate crime allegations.

[11] — Online content moderation. Current challenges in detecting hate speech, 2023

[12] — Actioned hate speech content items on Facebook worldwide from 2017 to 2023

[13] — Facebook Uses Deceptive Math to Hide Its Hate Speech Problem

[14] — Tech giants’ slowing progress on hate speech removals underscores need for law, says EC

[15] — Tech layoffs ravage the teams that fight online misinformation and hate speech

[16] — How tech platforms fuel U.S. political polarization and what government can do about it

[17] — Social media platforms have influenced polarization in politics

[18] — Office of the Spokesperson for the UN Secretary-General

[20] — Beyond the “Big Three”: Alternative platforms for online hate speech

[22] — On Gab, an Extremist-Friendly Site, Pittsburgh Shooting Suspect Aired His Hatred in Full

[27] — Hate speech — Causes and consequences in public space

[28] — The State of Global Emotions

[32] — NoToHate Fact Sheets